Getty drops primary claims against Stable Diffusion in AI lawsuit after 'failing to establish a sufficient connection between the infringing acts and the UK jurisdiction for copyright law to bite'

Getty still has the secondary infringement claims against the AI model itself to take to court.

The burgeoning world of AI tech is currently the centre of controversy and confusion around the world. On the one hand we have wonderful uses for AI like making our PC gaming even better, or hunting down bugs, and on the other we have… well... all the other stuff. AI art is particularly contentious as it's trained off the hard work of others without their consent or compensation, which is why it's no surprise we're seeing interesting twists and turns in lawsuits like image database company Getty's case against Stability AI.

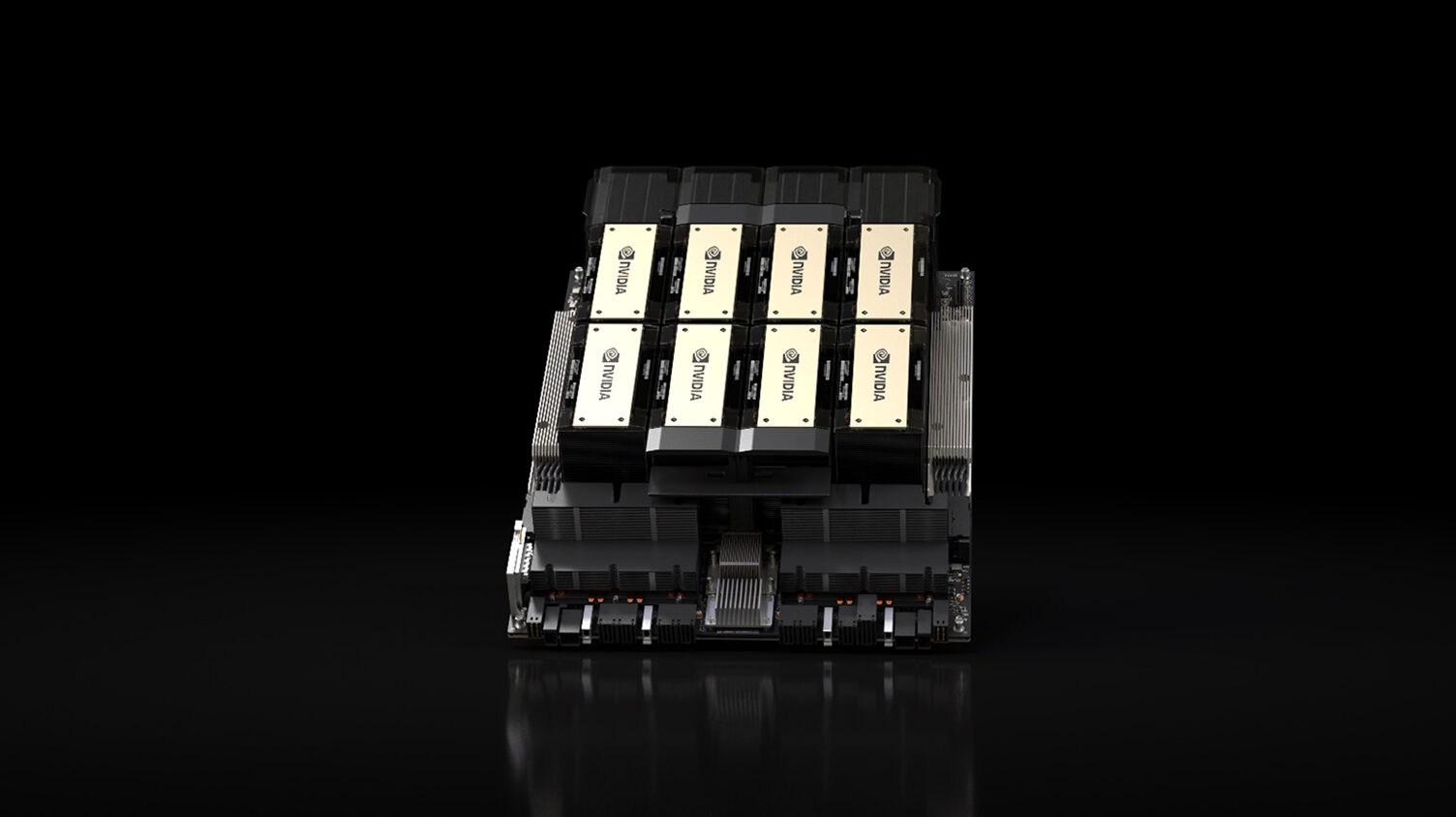

Getty Images began legal proceedings in the UK against Stable Diffusion, Stability AI's art tool, back in January 2023. It claimed that the tool was trained on millions of copyrighted images to deliver its results. Getty stated that the AI's images were incredibly similar to copyrighted pictures, and that some of the generated images even had watermarks still attached.

It's worth noting this isn't Getty Images fighting the human fight against AI for the efforts of its photographers, though it's long been worried about the legal implications of AI. Getty has its own AI-powered image generator that is trained on its library of images and video, so it has no interest in going against AI as a concept, merely in protecting what it believes are its assets and, in turn, profits.

As one of the more prominent cases of its kind, it has been closely watched by all who're interested in seeing what precedents it sets for the use of AI in the future. According to TechCrunch, this all took a new turn at London's High Court on Wednesday when Getty Images dropped its primary claims of copyright infringement in the case.

In closing arguments, Getty's lawyers said the claims were dropped as a strategic move to focus on stronger aspects of the case, stating that for these claims the evidence was weak and knowledgeable witnesses from Stability AI were hard to come by. It's incredibly common for lawyers to drop claims they don't believe can be substantiated in court rather than weaken their other arguments by tying them to unprovable positions. A bit like what happened in the Meta AI case.

“The training claim has likely been dropped due to Getty failing to establish a sufficient connection between the infringing acts and the UK jurisdiction for copyright law to bite,” Ben Maling, a partner at law firm EIP, told TechCrunch. “Meanwhile, the output claim has likely been dropped due to Getty failing to establish that what the models reproduced reflects a substantial part of what was created in the images (e.g. by a photographer).”

This isn't the end of Getty's lawsuit against Stability AI as the company has other claims, but it does appear to take a fair few of the teeth out of the argument. It also seems to highlight the uncertainty around AI's place and what is legal when it comes to the use and training of these bots. In a field so new, experts who aren't actively working for companies providing these solutions are often harder to come by.

Instead, Getty is focussing on the secondary infringement claim as well as going after trademark infringement. This targets the AI models themselves, arguing that they could breach UK law.

“Secondary infringement is the one with widest relevance to genAI companies training outside of the UK, namely via the models themselves potentially being ‘infringing articles’ that are subsequently imported into the UK,” Maling said.

These changes won't affect the concurrent case Getty's U.S. wing has against Stability AI for trademark and copyright infringement. It was also started early in 2023 and sings a similar tune to the UK lawsuit, which is seeking a total of $1.7 billion in damages after claiming Stability AI used up to 12 million copyrighted images to train its model.

While everyone holds their breath for these kinds of lawsuits to finish and establish precedent, it feels like we might be a fair while off. Most cases levelled against AI seem to struggle with finding arguments that work within the current laws. Whether we need better laws to help deal with AI or just better arguments remains to be seen, alongside what further implications these will have for other aspects of AI tech beyond just image generation.